Reduce Complexity with Variants

Summary: The structure of our data should match the relevant structures in the real world. And to ensure that our data is structured well, we should reduce the potential for incorrect structure. Variants provide a great solution for it.

Introduction

Jeanine Adkisson's talk at the Conj last year was my favorite talk of the Conj. Her presentation was well-delivered, her slides were just quirky enough, and of course the material was so important. You should go watch the talk before you read on.

The problem

In Clojure we often represent things as maps with some implicit rules. For instance, there might be three different ways to represent an image: an array of pixels in memory, a filename for a jpeg file on disk, or a URL of a jpeg on the web. We might have maps that look like this:

{:type :image/in-memory

:pixels [...]}

{:type :image/on-disk

:filename "/cats.jpg"}

{:type :image/web

:url "http://cats.com/cats.jpg"}

This is all well and good. Except it's rife with incidental complexity.

We have an implicit rule that if the :type is :image/in-memory,

then the pixels will be at :pixels, but if the :type is

:image/on-disk, we are expecting a string containing a filename at

:filename, and so on. The rule might seem obvious, but it's actually

kind of cumbersome.

If it's a rule, are we going to enforce it? Where? How strictly? How

often? Enforcement is great, but what do we do if we find an

:image/web without a :url? Decisions, decisions. Plus, it's a

hashmap! It looks like you can

have multiple things. Some lazy programmer is going to reuse those keys.

For instance, there could be a save-to-disk function that takes an

image and writes it to disk. It returns the original image with the

filename where it was saved attached at :filename. Now you've got

:filename and :pixels. It's unclear whether that's what we want, but

it's totally allowed by the data structure.

Actually, if you analyze it, you can quantify the complexity pretty

well. We'll make some simplifying assumptions. Let's just say we have

the type key and the three "value" keys. The type key can have four

possible values (:image/in-memory, :image/on-disk, :image/web, and

nil (or missing)) and the values keys have either a valid value or are

missing (or nil). The number of states is 4 * 2 * 2 * 2 = 32. So

instead of three cases, like we want above, we have 32 cases to deal

with.

Is there a way to reduce this complexity? Is there a way to nip it in

the bud before we've got code enforcing these assumptions all over the

place? Do we really need to drown in assertions and nil checks?

A great solution

Jeanine showed us a well-suited solution. Her solution, as I mentioned before, is called "variants". We have three variants of image representation. Instead of representing them in a map, we represent them in a vector, like this:

[:image/in-memory [...]]

[:image/on-disk "/cats.jpg"]

[:image/web "http://cats.com/cats.jpg"]

How does this help?

Well, let's do the math. There are now two positions where data can

be (instead of four in the hashmap representation). If we make the

same simplifications we made above, we have 4 * 2 = 8. This is

cheating slightly because we're only considering vectors of two elements

or less. But then again, we cheated above because we never considered

adding arbitrary keys to the hashmap.

Okay, so it's 32 vs. 8. But what happens when we add a new kind of

image? In the hashmap version, we're adding a new key and a new :type,

so the new states become 5 * 2 * 2 * 2 * 2 = 80. Woah! And in the

vector version? 5 * 2 = 10. Wow! Much better. The variant solution

actually grows in complexity more slowly than the hashmap solution.

But we've gained something less quantifiable: the data is now easier to write, easier to read, and most importantly, easier to get right. It looks a lot like the tuple pattern we're used to in Clojure. The first value of the tuple is a tag telling you what kind of image it is. As Jeanine pointed out, it's the same pattern employed by hiccup to represent HTML. The first element of a hiccup vector tells you what kind of HTML tag it is.

A plot twist

Now, Jeanine's solution works really well for deeply nested structures like ASTs or HTML trees. However, I always get a little scared when I see vectors being overloaded.

It works okay in hiccup because when you're writing hiccup, you are mostly making big chunks of hiccup. You're not passing hiccup around. Hiccup in your code is dense and will typically only be returned (as opposed to passed to another function). But notice the problem with having to use lists within hiccup to represent sequences. Even in hiccup, people get tripped up. But it's still a great solution.

However, once you start shipping these things around as units of data, they get to be a problem. This has happened to me in the past. You start accumulating values, you have a vector of variants, and all of a sudden you're counting how many nesting levels you've got to know how to interpret each level. You've got a vector of vectors of vectors. It happens everywhere where vectors are overloaded.

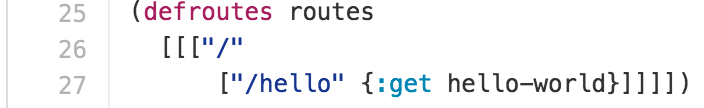

It happens in Pedestal routes. Here's a sample Pedestal route data structure. Notice it starts with three nesting levels. Each of those vectors has a different meaning. Perhaps putting keywords in the front would help, but I suspect that's not a good solution here.

A different solution

I do have a solution to the case of variants that need to get passed around and collected into sequences. I propose that you use a hashmap with a single key, which is the variant's tag. The value for that key is a vector of data.

{:image/in-memory [[...]]} ;; ellipsis represents lots of pixel data

{:image/on-disk ["/dogs.jpg"]}

{:image/web ["http://doggy.com/dogs.jpg"]}

This can still be checked by core.typed and used in core.match. It's natural in Clojure for a hashmap to represent a self-contained value. It's also only slightly harder to type than the vector version, and just as easy to read. I think it's easy, at least, because this is clearly a different pattern from the original hashmap pattern. And it's still easy to get right. I also recommend adding a namespace to the tag to show that that they are related.

The takeaway

When do you use variants?

Whenever you have different data that have the same operations (for instance, all three kinds of images can be displayed to the screen).

When do you use the vector version of variants?

If you have trees like HTML documents or ASTs after parsing.

When do you use the single key hashmap version of variants?

If you are planning on collecting values into a vector, or the nesting is not obvious.

Other ways to reduce complexity

Jeanine went over core.typed and core.match for variants.

By using core.typed, you can encode the exact data structures you want,

eliminate nils, and enforce enumerations (like the :types). You can

eliminate all of the erroneous cases statically and be left with only

the three correct cases (or your code won't compile).

core.match can be used, too, both for convenience (comparing and defining locals in one go), encoding some more complex rules (like extra keys are allowed but ignored), and also collapsing all of the erroneous cases into a single catch-all case. That's still four cases instead of three, but that's way nicer. And core.match works for all of the solutions I've presented (including the original hashmap version).

Conclusion

Variants are a great tool that we should keep up front for lots of jobs.

Their complexity grows much more slowly than the hashmap-with-:type

solution we saw at first. We shoul

d all be considerate of how much

complexity our choice of data representations is adding to our system.

Don't forget the talk.

There is also an interview with

Jeanine I did before the Conj.